arch-function-1.5b

The Katanemo Arch-Function collection is a set of state-of-the-art (SOTA) large language models (LLMs) specifically designed for function calling tasks. The models are designed to understand complex function signatures, identify required parameters, and produce accurate function call outputs based on natural language prompts. Achieving performance on par with GPT-4, these models set a new benchmark in the domain of function-oriented tasks, making them suitable for scenarios where automated API interaction and function execution are crucial.

In summary, the Katanemo Arch-Function collection demonstrates:

State-of-the-art performance in function calling

Accurate parameter identification and suggestion, even in ambiguous or incomplete inputs

High generalization across multiple function calling use cases, from API interactions to automated backend tasks.

Optimized low-latency, high-throughput performance, making it suitable for real-time, production environments.
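The function-calling workflow described above (reading a function signature, identifying required parameters, emitting a call) can be illustrated with a minimal sketch. It assumes the JSON-schema tool format used by OpenAI-compatible chat APIs; the `get_weather` tool, the helper names, and the example call are all hypothetical and are not taken from the Arch-Function model card.

```python
import json

# Hypothetical tool definition in the JSON-schema style used by
# OpenAI-compatible chat APIs (an assumption, not from the model card).
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}


def build_request(model, user_prompt, tools):
    """Assemble a chat-completion request body that offers tools to the model."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": tools,
    }


def missing_required(tool, call):
    """Return the required parameter names that a function call left out."""
    arguments = json.loads(call["arguments"])
    required = tool["function"]["parameters"].get("required", [])
    return [name for name in required if name not in arguments]


request_body = build_request(
    "arch-function-1.5b", "What's the weather in Paris?", [get_weather_tool]
)

# Hand-written stand-in for a model response; this is not real model output.
example_call = {"name": "get_weather", "arguments": json.dumps({"unit": "celsius"})}
print(missing_required(get_weather_tool, example_call))
```

A check like `missing_required` is one way a caller might detect an incomplete call and ask the user for the missing value before executing anything.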

Repository: localai

License: apache-2.0

arch-function-7b

The Katanemo Arch-Function collection is a set of state-of-the-art (SOTA) large language models (LLMs) specifically designed for function calling tasks. The models are designed to understand complex function signatures, identify required parameters, and produce accurate function call outputs based on natural language prompts. Achieving performance on par with GPT-4, these models set a new benchmark in the domain of function-oriented tasks, making them suitable for scenarios where automated API interaction and function execution are crucial.

In summary, the Katanemo Arch-Function collection demonstrates:

State-of-the-art performance in function calling

Accurate parameter identification and suggestion, even in ambiguous or incomplete inputs

High generalization across multiple function calling use cases, from API interactions to automated backend tasks.

Optimized low-latency, high-throughput performance, making it suitable for real-time, production environments.

Repository: localai

License: apache-2.0

arch-function-3b

The Katanemo Arch-Function collection is a set of state-of-the-art (SOTA) large language models (LLMs) specifically designed for function calling tasks. The models are designed to understand complex function signatures, identify required parameters, and produce accurate function call outputs based on natural language prompts. Achieving performance on par with GPT-4, these models set a new benchmark in the domain of function-oriented tasks, making them suitable for scenarios where automated API interaction and function execution are crucial.

In summary, the Katanemo Arch-Function collection demonstrates:

State-of-the-art performance in function calling

Accurate parameter identification and suggestion, even in ambiguous or incomplete inputs

High generalization across multiple function calling use cases, from API interactions to automated backend tasks.

Optimized low-latency, high-throughput performance, making it suitable for real-time, production environments.

Repository: localai

License: apache-2.0

mistral-7b-instruct-v0.3

The Mistral-7B-Instruct-v0.3 Large Language Model (LLM) is an instruct fine-tuned version of the Mistral-7B-v0.3.

Mistral-7B-v0.3 has the following changes compared to Mistral-7B-v0.2:

Extended vocabulary to 32768

Supports v3 Tokenizer

Supports function calling

Repository: localai

License: apache-2.0

mistral-nemo-instruct-2407

The Mistral-Nemo-Instruct-2407 Large Language Model (LLM) is an instruct fine-tuned version of the Mistral-Nemo-Base-2407. Trained jointly by Mistral AI and NVIDIA, it significantly outperforms existing models smaller or similar in size.

Repository: localai

License: apache-2.0

lumimaid-v0.2-12b

This model is based on: Mistral-Nemo-Instruct-2407

Wandb: https://wandb.ai/undis95/Lumi-Mistral-Nemo?nw=nwuserundis95

NOTE: As explained on the Mistral-Nemo-Instruct-2407 repo, it's recommended to use a low temperature. Please experiment!
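To see why a low temperature changes the output, here is a small sketch of temperature-scaled softmax. The logits and the two temperature values (0.3 and 1.0) are illustrative assumptions chosen for the example, not settings taken from this entry.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative token scores; real models produce one score per vocabulary entry.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.3)   # sharper distribution
high = softmax_with_temperature(logits, 1.0)  # flatter distribution

# The top-scoring token takes a larger share of probability at low temperature.
print(low[0] > high[0])
```

Lower temperatures concentrate probability on the highest-scoring tokens, which tends to make sampling more conservative and repeatable.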

Lumimaid 0.1 -> 0.2 is a HUGE step up dataset-wise.

As some people have told us our models are sloppy, Ikari decided to say fuck it and literally nuke all the chats with the most slop.

Our dataset has stayed the same since day one: we added data over time, cleaned it, and repeated. After not releasing a model for a while because we were never satisfied, we think it's time to come back!

Repository: localai

License: apache-2.0

mn-12b-celeste-v1.9

Mistral Nemo 12B Celeste V1.9

This is a story writing and roleplaying model trained on Mistral NeMo 12B Instruct at 8K context using Reddit Writing Prompts, Kalo's Opus 25K Instruct, and cleaned c2 logs.

This version has improved NSFW, smarter and more active narration. It's also trained with ChatML tokens so there should be no EOS bleeding whatsoever.
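For readers unfamiliar with ChatML, the sketch below shows how a conversation is usually laid out with its `<|im_start|>` and `<|im_end|>` markers. The `to_chatml` helper and the sample messages are hypothetical; in practice the exact template normally comes from the model's own tokenizer or backend configuration.

```python
def to_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts as a ChatML prompt string."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    ]
    if add_generation_prompt:
        # Leave an open assistant turn for the model to complete.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


# Sample conversation, invented for illustration.
prompt = to_chatml(
    [
        {"role": "system", "content": "You are a narrator for a short story."},
        {"role": "user", "content": "Begin with a storm at sea."},
    ]
)
print(prompt)
```

Because every turn ends with an explicit `<|im_end|>` marker, a backend can use that marker as a stop sequence to cut generation at the end of the assistant's turn.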

Repository: localai

License: apache-2.0

pantheon-rp-1.6-12b-nemo

Welcome to the next iteration of my Pantheon model series, in which I strive to introduce a whole collection of personas that can be summoned with a simple activation phrase. The huge variety in personalities introduced also serves to enhance the general roleplay experience.

Changes in version 1.6:

The final finetune now consists of data that is equally split between Markdown and novel-style roleplay. This should solve Pantheon's greatest weakness.

The base was redone. (Details below)

Select Claude-specific phrases were rewritten, boosting variety in the model's responses.

Aiva no longer serves as both persona and assistant, with the assistant role having been given to Lyra.

Stella's dialogue received some post-fix alterations since the model really loved the phrase "Fuck me sideways".

Your user feedback is critical to me so don't hesitate to tell me whether my model is either 1. terrible, 2. awesome or 3. somewhere in-between.

Repository: localai

License: apache-2.0

mn-backyardai-party-12b-v1-iq-arm-imatrix

This is a group-chat based roleplaying model, based off of 12B-Lyra-v4a2, a variant of Lyra-v4 that is currently private.

It is trained on an entirely human-based dataset, based on forum / internet group roleplaying styles. The only augmentation done with LLMs is to the character sheets, so that they fit the system prompt and so that various character sheets fit within context.

This model is still capable of 1 on 1 roleplay, though I recommend using ChatML when doing that instead.

Repository: localai

License: apache-2.0

ml-ms-etheris-123b

This model merges the robust storytelling of multiple models while attempting to maintain intelligence. The final model was merged after Model Soup with DELLA to add some special sauce.

- model: NeverSleep/Lumimaid-v0.2-123B

- model: TheDrummer/Behemoth-123B-v1

- model: migtissera/Tess-3-Mistral-Large-2-123B

- model: anthracite-org/magnum-v2-123b

Use Mistral, ChatML, or Meth Format

Repository: localai

License: apache-2.0

mn-lulanum-12b-fix-i1

This model was merged using the della_linear merge method using unsloth/Mistral-Nemo-Base-2407 as a base.

The following models were included in the merge:

VAGOsolutions/SauerkrautLM-Nemo-12b-Instruct

anthracite-org/magnum-v2.5-12b-kto

Undi95/LocalC-12B-e2.0

NeverSleep/Lumimaid-v0.2-12B

Repository: localai

License: apache-2.0

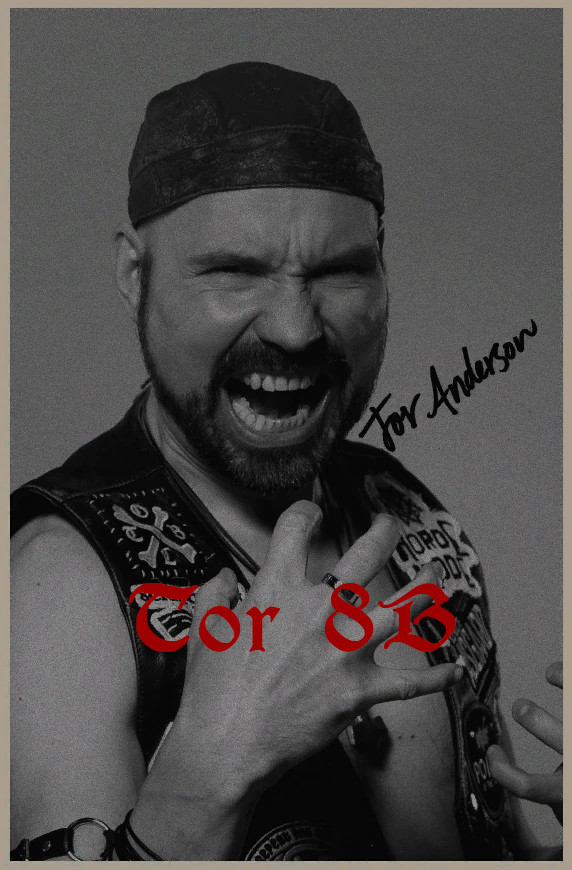

tor-8b

An earlier checkpoint of Darkens-8B using the same configuration, which I felt was different enough from its 4-epoch cousin to release. Finetuned on top of the Prune/Distill NeMo 8B done by Nvidia, this model aims to have generally good prose and writing while not falling into Claude-isms.

Repository: localai

License: apache-2.0

darkens-8b

This is the fully cooked, 4-epoch version of Tor-8B. This is an experimental version: despite being trained for 4 epochs, the model feels fresh and new and is not overfit. This model aims to have generally good prose and writing while not falling into Claude-isms, and it follows the actions "dialogue" format heavily.

Repository: localai

License: apache-2.0
